Explainable AI for Forecasts You Can Trust

Last update: September 24, 2024

Overview

For enterprises, having the ability to understand, interpret, and trust recommendations from AI forecasting solutions is paramount. While classical time series forecasting methods inherently offered interpretability, newer approaches based on neural networks and foundation models have prioritized raw performance over explainability. This shift has given rise to the “black box” problem that plagues many AI systems.

To address this challenge, Ikigai’s aiCast forecasting solution reveals the drivers behind forecast outputs through a full decomposition and analysis of time series data. This paper shows how aiCast draws on a deep understanding of time series analysis to bridge the gap between advanced AI capabilities and the critical need for interpretable results.


Introduction

Imagine if you could run a forecast and instantly understand what has changed against any point in the past. What differs today from the same day last year? What factors are driving these changes? Is it due to a new promotion, a recent product launch, or shifting market dynamics? How much do seasonality and holidays factor into outcomes on any given day? And how do promotions impact sales across the entire product catalog?

These questions are just the tip of the iceberg for business users leveraging AI forecasting solutions.

The field of forecasting has made significant strides in recent years, propelled by advancements in machine learning and AI capabilities. However, the advent of large models based on neural networks (including foundation models) has introduced a trade-off: computational power has increased, but at the cost of explainability.

Explainable AI (XAI) refers to a set of processes and methods that allow human users to comprehend and trust the results and outputs created by machine learning algorithms. XAI techniques can be applied at various stages of the machine learning lifecycle to provide transparency and insight into model behavior. For example:

- Pre-modeling: Analyzing training data to identify potential biases or limitations

- During-modeling: Building interpretability directly into the model architecture

- Post-modeling: Generating explanations of model outputs after training


Why explainability matters

While a number of open source and proprietary models can help with forecasting, the lack of explainability in these systems should raise significant concerns for data science teams and business stakeholders alike.

- Explainability drives business buy-in

Ultimately, explainability makes it easier to communicate the rationale behind different forecasting outcomes to stakeholders, which increases confidence in the outputs and resulting strategies. Moreover, explainability can reveal biases or limitations in the model, allowing planners to account for these factors when developing scenarios and interpreting results.

- Explainability is part of good AI governance

When a business relies on AI outputs for critical decisions that impact employees and customers, it’s necessary to understand why those decisions were made. This is also crucial for audit trails. For example, consider a bank using AI to predict loan default risk. If the AI system flags higher risk for an applicant, explainable AI can provide an audit trail showing how that applicant’s credit history and income trends compare to past default cases. This helps lenders understand the AI’s reasoning, demonstrates objective decision-making for compliance reviews, and enables monitoring for fairness over time.

- Explainability enables AI predictions to be used for scenario planning

Explainable AI models play a crucial role in scenario planning by revealing the relative importance of inputs to the outcome(s). This transparency forms the foundation for exploring possible strategic actions alongside the potential consequences of decisions – core to effective scenario planning. By identifying the factors that most significantly influence results, planners can create meaningful scenarios and pinpoint where interventions might be most effective.

Ikigai: Explainable forecasts through advanced time series techniques

Ikigai is an AI platform designed to transform how businesses handle internal and external time series data, taking it from disparate spreadsheets and business systems and turning it into actionable insights. Built on a foundation of large graphical models, aiCast™ is a multivariate forecasting solution that generates meaningful predictions from enterprise and external time series data.

aiCast delivers significant performance benefits over legacy approaches while maintaining the explainability that users need to trust and act on the platform’s insights. With a real-time view of how forecasts are derived and what contributes to them, users can comprehend the reasoning behind each prediction or recommendation. At every level of the forecast, users can drill down into the specifics of how each output was generated, which provides a high degree of explainability.

Ikigai's three-part approach to explainable forecasting through time series analysis

The core of Ikigai’s approach to deliver XAI lies in its ability to provide a deeper understanding of time series data.

In an enterprise setting, decision-making is often driven by tabular, timestamped data, referred to as time series data. There are nearly unlimited examples of time series data in a business, including customer signups, employee growth, promotion outcomes, machine performance, YoY revenue, and more. Comprehending the behavior of time series data is crucial for accurate forecasting. Ikigai takes a three-part approach to ensure that forecasts are always easy to explain: understanding the data, explainable forecast generation, and forecast decomposition and visualization.

1. Understanding the Data

A critical step in forecasting is gaining a deep understanding of relevant time series datasets before the forecasts are generated. With robust autoML, the Ikigai system supports data science teams in automatically preparing the data for use in aiCast through the following mechanisms:

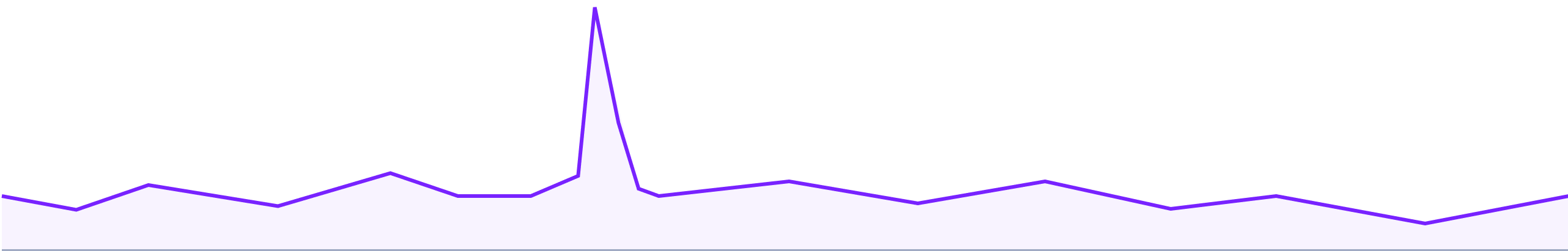

Anomaly detection

Unusual data points are flagged for review. This allows data scientists to identify and address data quality issues, such as outliers or inconsistencies, that may impact the accuracy of predictions. This step also creates an opportunity for data scientists to engage with business stakeholders to better understand the business context of the time series. For example, what appears to be an anomaly in sales numbers may actually be related to a major promotion that will be repeated in the coming year and needs to be accounted for.
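One common, generic way to flag unusual points for review is the modified z-score, which measures distance from the median in units of the median absolute deviation (MAD). The sketch below illustrates that technique under stated assumptions; it is not a description of how Ikigai detects anomalies, and the function name `flag_anomalies` and the conventional 3.5 threshold are illustrative choices.

```python
from statistics import median


def flag_anomalies(values, threshold=3.5):
    """Return the indices of points whose modified z-score exceeds `threshold`.

    The median and the median absolute deviation (MAD) are used instead of
    the mean and standard deviation because they are far less distorted by
    the very outliers we are trying to find.
    """
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # no spread at all, so this rule cannot single anything out
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores
    # when the data are roughly normal.
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - center) / mad) > threshold]


daily_sales = [102, 98, 101, 99, 100, 240, 97, 103, 100, 99]
print(flag_anomalies(daily_sales))  # [5]
```

Index 5 would then go to the business for review: it could be a data-entry error, or a promotion that should be modeled as an input rather than discarded.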

Imputation

This step accounts for missing values by “imputing” them, that is, by probabilistically generating what the missing values could have been. For example, in sales forecasting, missing sales values could be imputed based on inventory data.
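A minimal sketch of probabilistic imputation, assuming the series has a known seasonal period: each gap is filled with the mean of observed values at the same position in the cycle, and a set of sampled values expresses the uncertainty around that estimate. Unlike the inventory-based example above, this sketch uses only the series’ own seasonal pattern; the function `impute_seasonal`, the normal sampling model, and the sample count are hypothetical choices for illustration.

```python
import random
from statistics import mean, pstdev


def impute_seasonal(values, period, n_samples=100, seed=0):
    """Fill `None` gaps using observed values at the same point in the cycle.

    Returns (filled, draws). `filled` uses the seasonal mean as a point
    estimate; `draws` maps each imputed index to sampled plausible values,
    expressing uncertainty about what the missing value could have been.
    """
    rng = random.Random(seed)  # seeded so the draws are reproducible
    filled = list(values)
    draws = {}
    for i, v in enumerate(values):
        if v is not None:
            continue
        # Observed values sharing this point's position in the cycle.
        peers = [values[j] for j in range(i % period, len(values), period)
                 if values[j] is not None]
        if not peers:
            continue  # nothing to learn from, so the gap is left as-is
        mu, sigma = mean(peers), pstdev(peers)
        filled[i] = mu
        draws[i] = [rng.gauss(mu, sigma) for _ in range(n_samples)]
    return filled, draws


# Three weeks of daily sales; one value in week three (index 19) is missing.
daily = [50, 52, 49, 51, 60, 80, 75,
         48, 53, 50, 52, 61, 82, 77,
         49, 51, 50, 53, 62, None, 76]
filled, draws = impute_seasonal(daily, period=7)
print(filled[19])  # 81 (mean of the same weekday in weeks one and two)
```

A production imputer would also condition on related signals, such as the inventory data mentioned above; that is deliberately left out here.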


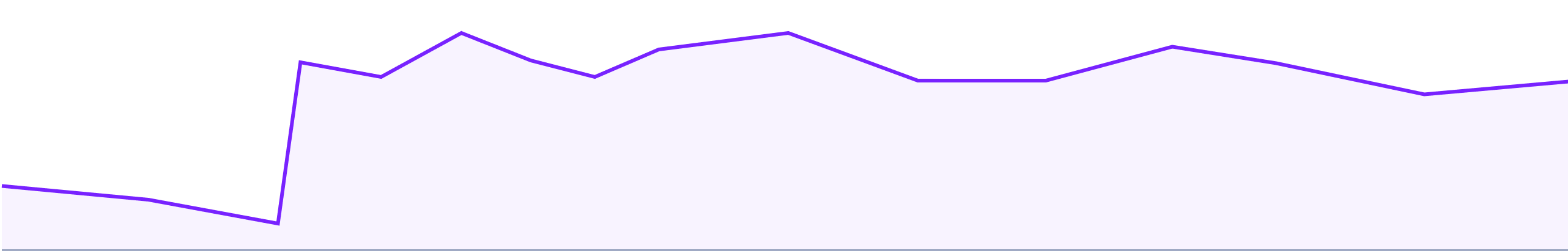

Change Point detection

This step identifies significant shifts in data patterns (for example, buyer behavior before and after COVID). This ensures that forecasting models leverage the most relevant and up-to-date data while ignoring information that no longer applies.
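To make the idea concrete, here is a minimal least-squares sketch of one standard change point method: a single step of binary segmentation that looks for a shift in the mean. We do not know which method Ikigai uses; the function name `best_change_point`, the `penalty` threshold, and the minimum segment size are hypothetical tuning choices.

```python
def best_change_point(values, penalty, min_size=3):
    """Find the single split that best separates two constant-mean segments.

    Each candidate split k models values[:k] and values[k:] by their own
    means. The best split is kept only if it lowers the total squared error
    by more than `penalty`; otherwise None (no change) is returned.
    Re-applying this to each resulting segment is binary segmentation.
    """
    def sse(segment):
        m = sum(segment) / len(segment)
        return sum((x - m) ** 2 for x in segment)

    best_k, best_cost = None, sse(values) - penalty  # cost bar to beat
    for k in range(min_size, len(values) - min_size + 1):
        cost = sse(values[:k]) + sse(values[k:])
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k


weekly_orders = [20, 21, 19, 20, 22, 21, 35, 36, 34, 37, 35, 36]
print(best_change_point(weekly_orders, penalty=10.0))  # 6
```

The penalty matters: without it, random noise alone would almost always produce some split that slightly reduces the error.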

Decomposition

This includes a breakdown of each time series into its constituent components:

Trend: Trends represent long-term patterns of the time series, and can capture the overall increase, decrease, or stability of the data over time. Examples include steady growth in sales over the years, or a gradual decline in product demand.

Seasonality: Seasonality refers to repeating patterns or cycles within the time series that occur at fixed intervals, such as daily, weekly, monthly, or yearly. These patterns are usually influenced by factors such as weather or standard business cycle fluctuations. For example, an energy company may show seasonality in the form of higher revenues during the peak summer season.

Recency: Recency refers to the importance of recent data points in predicting future values. This autoregressive approach recognizes that in many time series, the most recent observations have the strongest influence on upcoming values. Identifying how much weight to give to the most recent data points helps create more accurate short-term forecasts, particularly in rapidly changing environments where older data may be less relevant.

Noise: In any time series, some variation in the data will remain unexplained by trend, seasonality, or recency. This is expected and normal.
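Classical additive decomposition is one textbook way to split a series into the trend, seasonality, and noise components described above. The sketch below assumes an additive model (value = trend + seasonal + residual) and a known period; recency, which is usually captured with autoregressive (lagged) terms, is left out. We make no claim that aiCast decomposes series this way, and the function name `decompose` is hypothetical.

```python
def decompose(values, period):
    """Classical additive decomposition: values = trend + seasonal + residual.

    Trend is a centered moving average spanning one full period (a 2 x m
    average when the period is even), so it is undefined (None) for the
    first and last period // 2 points. Assumes enough data that every
    position in the cycle has at least one defined trend value.
    """
    n, half = len(values), period // 2
    trend = [None] * n
    for i in range(half, n - half):
        window = values[i - half:i + half + 1]
        if period % 2 == 1:
            trend[i] = sum(window) / period
        else:
            # Even period: the two end points share one slot, half weight each.
            trend[i] = (sum(window) - 0.5 * (window[0] + window[-1])) / period

    # Seasonal index: average detrended value at each position in the cycle.
    buckets = [[] for _ in range(period)]
    for i, t in enumerate(trend):
        if t is not None:
            buckets[i % period].append(values[i] - t)
    index = [sum(b) / len(b) for b in buckets]
    offset = sum(index) / period  # center so seasonal effects sum to zero
    index = [s - offset for s in index]
    seasonal = [index[i % period] for i in range(n)]

    # Whatever trend and seasonality do not explain is the residual (noise).
    residual = [None if t is None else v - t - s
                for v, t, s in zip(values, trend, seasonal)]
    return trend, seasonal, residual


pattern = [3, -1, -3, 1]  # a repeating 4-step seasonal effect
sales = [10 + 0.5 * i + pattern[i % 4] for i in range(16)]
trend, seasonal, residual = decompose(sales, period=4)
print(seasonal[:4])  # [3.0, -1.0, -3.0, 1.0]
```

Because this synthetic series is exactly a straight-line trend plus a repeating pattern, the sketch recovers the pattern and leaves a residual of zero; real data always leaves some noise.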

Embeddings and cohorts

A key differentiator of the Ikigai platform is its innovative application of Time2Vec, a machine learning technique. Analogous to the Word2Vec technique for uncovering the semantics of language, Time2Vec represents time series as vectors, exposing their intrinsic qualities and attributes for more effective analysis.

With the time series embeddings enabled by Time2Vec, data scientists can uncover patterns and behaviors across related time series, revealing insights that might be obscured when examining individual time series in isolation. Furthermore, the embeddings generated by Time2Vec serve as a foundation for advanced analytical techniques, such as performing clustering analysis, or defining cohorts based on shared temporal characteristics. This capability is particularly powerful for uncovering hidden relationships and structures within complex time-dependent data.
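Time2Vec itself is not reproduced here. To show how a fixed-length embedding enables similarity comparisons and cohort building, the sketch below substitutes a deliberately simple, hypothetical embedding (z-normalization followed by piecewise aggregate averaging) and groups series by cosine similarity. The functions `embed`, `cosine`, and `cohorts`, the 4-dimensional vectors, and the 0.9 threshold are all illustrative assumptions.

```python
from math import sqrt


def embed(series, dims=4):
    """Hypothetical shape embedding: z-normalize, then average into chunks.

    Z-normalizing removes scale, so a small SKU and a large SKU with the
    same pattern map to nearly the same vector. Assumes the series is not
    constant and its length is a multiple of `dims`.
    """
    m = sum(series) / len(series)
    sd = sqrt(sum((x - m) ** 2 for x in series) / len(series))
    z = [(x - m) / sd for x in series]
    step = len(z) // dims
    return [sum(z[i:i + step]) / step for i in range(0, len(z), step)]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b)))


def cohorts(embeddings, threshold=0.9):
    """Greedy grouping: join the first cohort whose anchor is similar enough."""
    groups = []  # each group is a list of names; its first name is the anchor
    for name, vec in embeddings.items():
        for group in groups:
            if cosine(embeddings[group[0]], vec) >= threshold:
                group.append(name)
                break
        else:
            groups.append([name])
    return groups


skus = {
    "A": [1, 2, 3, 4, 5, 6, 7, 8],          # rising
    "B": [10, 20, 30, 40, 50, 60, 70, 80],  # rising, ten times the volume
    "C": [8, 7, 6, 5, 4, 3, 2, 1],          # falling
}
print(cohorts({k: embed(v) for k, v in skus.items()}))  # [['A', 'B'], ['C']]
```

A learned embedding would capture far richer structure than this, but the downstream steps (similarity, clustering, cohort assignment) work the same way on any fixed-length vectors.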

These data understanding techniques form an integral part of Ikigai’s data validation and exploration process, empowering data scientists to develop a comprehensive understanding of the time series data before proceeding with forecasting. While many teams may employ some of these techniques today, Ikigai makes it easy to apply them quickly and out of the box.

2. Explainable forecast generation

Once the data has been thoroughly understood and pre-processed via the Ikigai platform, aiCast generates explainable forecasts using a combination of advanced machine learning techniques and intuitive inputs. The key inputs to the forecasting model include:

Primary time series data: The main time series dataset that needs to be forecasted, such as sales figures, website traffic, or sensor readings.

Auxiliary data (Covariates): External data that can influence the primary time series, such as holidays, promotions, weather conditions, or economic indicators. aiCast incorporates this auxiliary data to capture the impact of external factors on the forecast.

Previously detected change points and anomalies: The change points and anomalies identified during the data understanding phase can be included as inputs to the forecasting model. This allows aiCast to account for significant shifts and outliers in the data when generating forecasts.

Cohorts based on time series embeddings: The cohorts derived from time series embeddings can be used as inputs to the forecasting model, enabling it to capture common patterns and behaviors across similar time series.

aiCast provides the flexibility to include or exclude specific inputs when generating forecasts. For example, data scientists can choose to generate forecasts with or without the detected anomalies, depending on their relevance and impact on the forecast.
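The toy model below is not aiCast; it is a minimal additive sketch (a per-cycle baseline plus a single promotion covariate) meant to illustrate two ideas from this section: an auxiliary input receives its own estimated effect, and detected anomalies can be included in or excluded from the fit. The function `fit_forecast`, its `exclude` parameter, and the data are hypothetical. It assumes every position in the cycle has at least one kept, non-promotion observation.

```python
def fit_forecast(history, promo, period, exclude=()):
    """Fit y = baseline[position in cycle] + uplift * promo.

    `exclude` holds indices (e.g. detected anomalies) to leave out of the
    fit, mirroring the option to forecast with or without them.
    Returns a function mapping (time index, promo flag) -> forecast.
    """
    skip = set(exclude)
    keep = [i for i in range(len(history)) if i not in skip]
    # Baseline per cycle position, estimated from non-promotion periods only.
    base = []
    for p in range(period):
        vals = [history[i] for i in keep if i % period == p and not promo[i]]
        base.append(sum(vals) / len(vals))
    # Covariate effect: average excess over baseline during promotions.
    lifts = [history[i] - base[i % period] for i in keep if promo[i]]
    uplift = sum(lifts) / len(lifts) if lifts else 0.0

    def predict(t, on_promo):
        return base[t % period] + (uplift if on_promo else 0.0)

    return predict


# Index 2 (300) is a flagged data error; indices 5 and 10 are promotions.
history = [100, 120, 300, 90,
           100, 150, 110, 90,
           100, 120, 140, 90]
promo = [i in (5, 10) for i in range(len(history))]
with_anomaly = fit_forecast(history, promo, period=4)
without_anomaly = fit_forecast(history, promo, period=4, exclude={2})
print(with_anomaly(14, True), without_anomaly(14, True))  # 187.5 140.0
```

Including the bad point inflates one baseline and even makes the promotion look harmful; excluding it recovers the consistent +30 promotion effect, which is why the include/exclude choice should be visible to the user.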

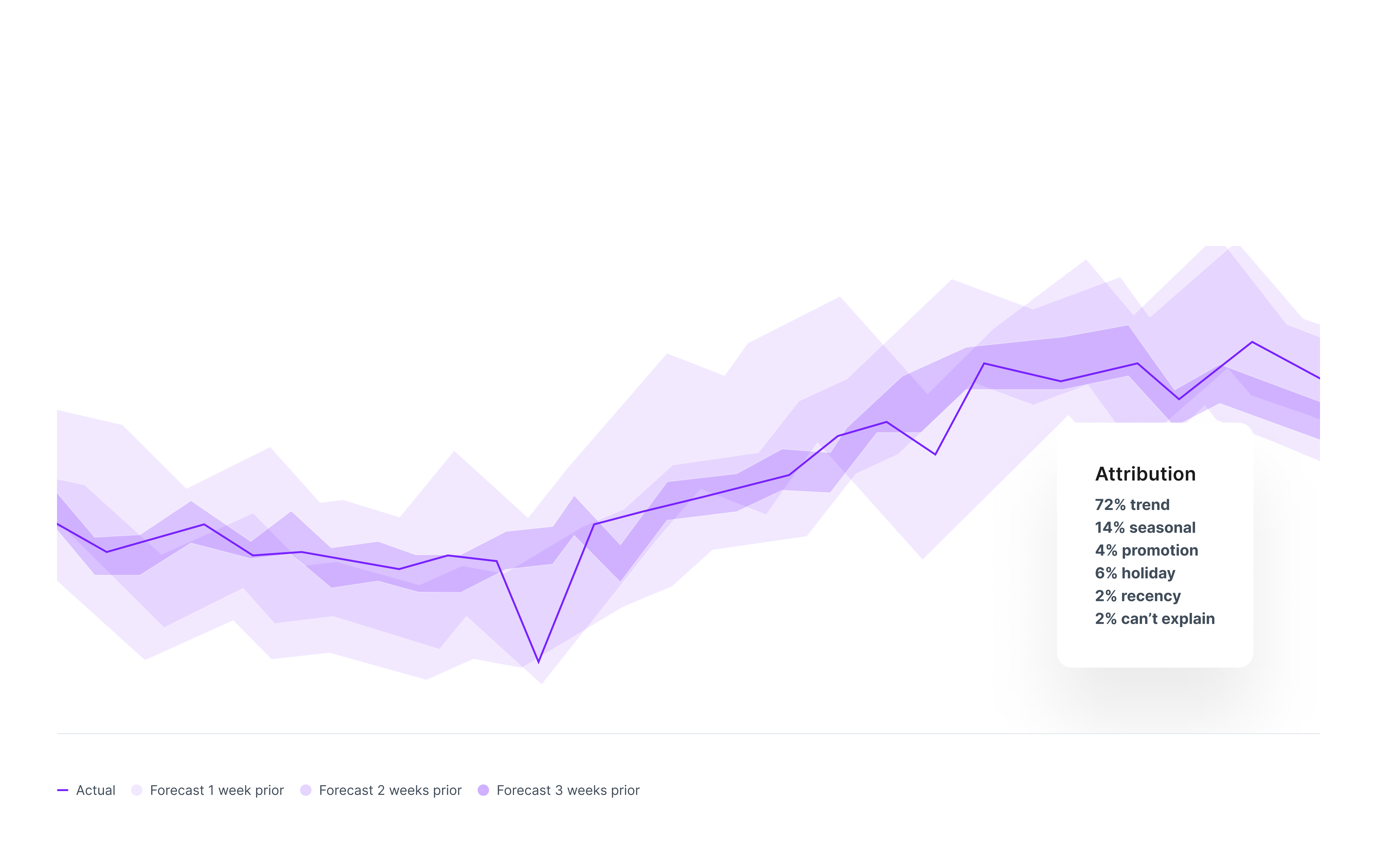

3. Forecast decomposition and visualization

One of the key features of aiCast that enables explainable forecasting is its ability to decompose the forecast into interpretable components. By breaking down the forecast into its constituent parts, aiCast provides data scientists and business stakeholders with a clear understanding of the factors driving predictions. Within aiCast, it's possible to attribute outcomes to trends, seasonality, recency, or auxiliary data.

aiCast provides intuitive visualizations that showcase the contribution of each component to the overall forecast, making it easy to communicate the drivers behind the predictions to business stakeholders.
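For an additive model, decomposing a forecast amounts to reporting each component’s contribution at a given time step. The sketch below assumes the components have already been estimated (the numbers are illustrative inputs, not results) and formats them as a ranked breakdown, the kind of table that sits behind a contribution chart. The function `explain` is hypothetical and is not aiCast’s API.

```python
def explain(components):
    """Rank each component's additive contribution to a single forecast.

    `components` maps a component name to its value in forecast units.
    Shares are relative to the sum of absolute contributions, so a negative
    driver (such as a seasonal dip) still shows how much it moved the number.
    """
    total = sum(components.values())
    scale = sum(abs(v) for v in components.values())
    lines = [f"forecast = {total:.1f}"]
    for name, value in sorted(components.items(), key=lambda kv: -abs(kv[1])):
        lines.append(f"  {name:<12}{value:+8.1f}  ({abs(value) / scale:5.1%})")
    return "\n".join(lines)


print(explain({"trend": 120.0, "seasonality": -15.0,
               "recency": 5.0, "promotion": 30.0}))
```

Here the trend accounts for most of the forecast of 140.0, the promotion adds 30, and a seasonal dip subtracts 15, which is the kind of statement a planner can take to stakeholders.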

Using Time2Vec for "similar trend" based validation

Time2Vec also plays a role in validation. Beyond enhancing forecasting capabilities, this embedding approach helps validate predictions through “similar trend” analysis at two crucial stages: before and after forecasting.

Pre-forecasting validation: Before generating forecasts, Time2Vec is used to analyze historical data. The vector representations created by Time2Vec capture intrinsic temporal patterns, facilitating the comparison of trends across different products, regions, or time periods. This process allows data scientists to verify whether time series that are expected to be similar actually appear similar in the embedding space, and to identify clusters or cohorts of time series with similar behavior patterns.

Post-forecasting validation: After forecasts are generated, Time2Vec can be applied again to the forecasted data. This makes it possible to compare forecasts by identifying products or time series whose forecasts look similar. It also enhances explainability: comparing the embeddings or cohorts of forecasted data with those of historical data shows whether time series that were similar in the past are predicted to behave similarly in the future. Finally, this serves as a consistency check, on the assumption that similar products evolve consistently from past to future.

Because of Time2Vec, aiCast can provide examples of similar historical trends or related time series that support a specific prediction. Additionally, Time2Vec makes it possible to identify clusters and cohorts that exhibit similar performance patterns across both historical and forecasted data. This contextual information helps stakeholders further understand the basis for the forecast and increases confidence in the results.
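The post-forecasting consistency check can be illustrated without Time2Vec. The sketch below uses plain Pearson correlation as a hypothetical stand-in for embedding similarity: it lists pairs of series that tracked each other historically but whose forecasts diverge, which are the cases worth a closer look. The functions `similarity` and `inconsistent_pairs` and the 0.8 threshold are illustrative assumptions; constant series are not handled.

```python
from itertools import combinations
from math import sqrt


def similarity(a, b):
    """Pearson correlation, i.e. cosine similarity of the mean-centered series."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    return sum(x * y for x, y in zip(da, db)) / sqrt(
        sum(x * x for x in da) * sum(y * y for y in db))


def inconsistent_pairs(history, forecast, threshold=0.8):
    """Pairs similar in the past (>= threshold) but not in the forecast."""
    flagged = []
    for a, b in combinations(sorted(history), 2):
        if (similarity(history[a], history[b]) >= threshold
                and similarity(forecast[a], forecast[b]) < threshold):
            flagged.append((a, b))
    return flagged


history = {"A": [1, 2, 3, 4], "B": [2, 4, 6, 8], "C": [4, 3, 2, 1]}
forecast = {"A": [5, 6, 7, 8], "B": [8, 7, 6, 5], "C": [0, -1, -2, -3]}
print(inconsistent_pairs(history, forecast))  # [('A', 'B')]
```

A flagged pair is not automatically an error: a planned promotion or discontinuation could legitimately split two products. The check simply points stakeholders at the forecasts that need an explanation.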

Exploring the possibilities for demand forecasting with explainable AI

As we’ve explored throughout this whitepaper, explainability is not just a feature – it’s the foundation of any modern AI forecasting solution.

To ensure you’re getting the most out of your AI forecasting today – whether it's an in-house system or provided by a vendor – ask yourself these critical questions:

- How does your solution break down the components of a forecast? Can it clearly show the impact of trends, seasonality, and recent data on predictions?

- What methods does your AI use to handle and explain the impact of external factors? How are elements like promotions, weather, or economic indicators incorporated and explained in the forecast?

- Can your solution provide comparative analysis across different products or regions? Is it possible to understand how similar items or areas perform relative to each other?

- How does your AI validate its predictions? Are there mechanisms in place to compare forecasts with historical patterns and ensure consistency?

- What level of granularity does your solution offer in its explanations? Can stakeholders drill down into specific factors influencing individual SKUs or time periods?

The answers to these questions will reveal the depth of explainability in your current or potential AI forecasting solution. Remember, true explainable AI doesn’t just provide accurate predictions – it offers insights that drive informed decision-making and trust.

As you evaluate your forecasting needs, consider how Ikigai’s aiCast can transform your approach to demand planning. By combining cutting-edge AI with unparalleled explainability, aiCast helps your team move beyond mere prediction to gain actionable insights based on a true understanding of time series data.

Learn more

Ikigai’s forecasting solution, aiCast, is available through the Ikigai platform, and can be accessed via APIs.

To learn more about how explainable forecasting can help your organization see better outcomes, visit Ikigailabs.io or schedule a call with an Ikigai representative to see Ikigai in action.
